Data Centre AI evolution: combining MAAS and NVIDIA smart NICs

Benjamin Ryzman

on 21 June 2024

Tags: AI , bare-metal provisioning , cloud , data center , Data center networking , Data centre , MAAS , network , networking , smartnic

It has been several years since Canonical committed to implementing support for NVIDIA smart NICs in our products. Among them, Canonical’s metal-as-a-service (MAAS) enables the management and control of smart NICs on top of bare-metal servers. NVIDIA BlueField smart NICs are very high data rate network interface cards providing advanced software-defined data centre infrastructure services. They include high performance specialised network ASICs (application-specific integrated circuits) and powerful general purpose CPUs and RAM. These cards provide network function acceleration, hardware offloading and isolation that enable innovative network, security and storage use cases.

From integrating kernel drivers to deploying and managing the life-cycle of the software running on the cards themselves, Canonical products treat DPUs as first class citizens. Our operating system and infrastructure software enable all the features and benefits of these cards. Indeed, these programmable accelerators bring services that are key to Canonical’s data centre networking strategy.

It is no surprise, then, that we are very excited about NVIDIA’s BlueField-3. This addition to the BlueField line of DPUs (data processing units) and Super NICs is integral to NVIDIA’s Spectrum-X, their AI Cloud reference architecture.

In this blog post, we are going to discuss how modern AI workloads challenge the common data centre network technologies. We will introduce NVIDIA’s Spectrum-X, which combines their BlueField-3 DPUs and Super NICs with their Spectrum-4 line of Ethernet switches to solve these challenges. Finally, we will detail the extent of Canonical’s software support for this hardware.

How AI training puts pressure on Ethernet

Distributed deep learning computation steps

Data centre operators, enterprises and cloud providers need a coherent and future-proof approach to managing workloads and interconnecting them. Canonical stands ready to support greenfield initiatives in this domain, especially as AI and LLMs (large language models) revolutionise the approaches used in the past.

Distributed deep learning is achieved through three major computation steps:

- Computing the gradient of the loss function on each GPU running on each node in the distributed system,

- Computing the mean of the gradients by inter-GPU communication over the network,

- Updating the model.

The second step, known as the ‘Allreduce’ distributed algorithm, is by far the most demanding when it comes to the network.
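To make the three steps concrete, here is a minimal sketch in plain Python. It is a toy simulation, not a real training framework: each "worker" stands in for one GPU, the model is a single weight, and the `allreduce_mean` helper is a hypothetical name that simply averages values in memory where a real cluster would exchange them over the network.

```python
# Toy data-parallel training step. Model: y = w * x, loss = mean squared error.
# All names and values are illustrative assumptions.

def local_gradient(w, shard):
    """Step 1: gradient of the MSE loss on one worker's data shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def allreduce_mean(values):
    """Step 2: every worker ends up holding the mean of all workers' values.
    On a real cluster this is the network-heavy collective operation."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

def train_step(w, shards, lr=0.05):
    grads = [local_gradient(w, shard) for shard in shards]  # step 1
    averaged = allreduce_mean(grads)                         # step 2
    return w - lr * averaged[0]                              # step 3

# Two workers, each holding a shard of points on the line y = 3x.
shards = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]]
w = 0.0
for _ in range(200):
    w = train_step(w, shards)
print(round(w, 3))  # the weight converges towards 3
```

Only step 2 involves communication between workers, which is why its cost grows with the size of the model and the number of GPUs taking part.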

RDMA on Converged Ethernet congestion challenges

RDMA (remote direct memory access) is a mechanism which enables sharing memory between software processes connected over the network as if they were running on a single computer. One application of RDMA is high bandwidth, low latency, direct GPU-to-GPU transfer over the network. It is a key requirement to reduce the tail latency of the Allreduce parallel algorithm.

Ethernet is the most common interconnect technology. However, as we’ve discussed in our white paper, it is designed to be a best-effort network that may experience packet loss when the network or devices are busy. Congestion tends to occur as a result of large simultaneous data transfers between nodes in the context of the highly distributed parallel processing required for high-performance computing (HPC) and deep learning applications. This congestion leads to packet loss, which is highly detrimental to the performance of RDMA over converged Ethernet (RoCE). Multiple Ethernet extensions try to tackle this problem, requiring additional processing in all the components of the Ethernet fabric: servers, switches and routers.
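A short sketch shows why a single congested flow matters so much for a synchronous collective such as Allreduce. The timings below are invented purely for illustration; the only point is that the step cannot finish before its slowest transfer does.

```python
def allreduce_step_time(transfer_times_ms):
    """A synchronous collective finishes only when its slowest transfer does."""
    return max(transfer_times_ms)

# Hypothetical per-worker transfer times, in milliseconds.
healthy = [10.0] * 8
one_straggler = [10.0] * 7 + [45.0]  # one flow hit congestion and had to retransmit

print(allreduce_step_time(healthy))        # 10.0
print(allreduce_step_time(one_straggler))  # 45.0
```

Seven of the eight transfers are unaffected in the second case, yet every GPU waits for the eighth. This is what reducing tail latency means in practice.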

Ethernet’s best-effort shortcomings especially impact multi-tenant hyperscale AI clouds, which can support several AI training workloads running at the same time. The alternative, InfiniBand, is a proven networking technology for highly parallelised workloads, and particularly RDMA, but it does not benefit from Ethernet’s economies of scale, which can limit the return on investment in this context.

To support even the most demanding workloads, Canonical regularly updates its operating system and infrastructure tooling to enable the latest performance improvements and features. As a result, we closely follow our hardware partners’ innovations and ensure the best, most timely support for them, at every layer of the stack. This can be exemplified with MAAS support for BlueField-3, a key component of NVIDIA’s proposed solution to Ethernet limitations regarding multi-tenant AI workloads.

NVIDIA AI Cloud components

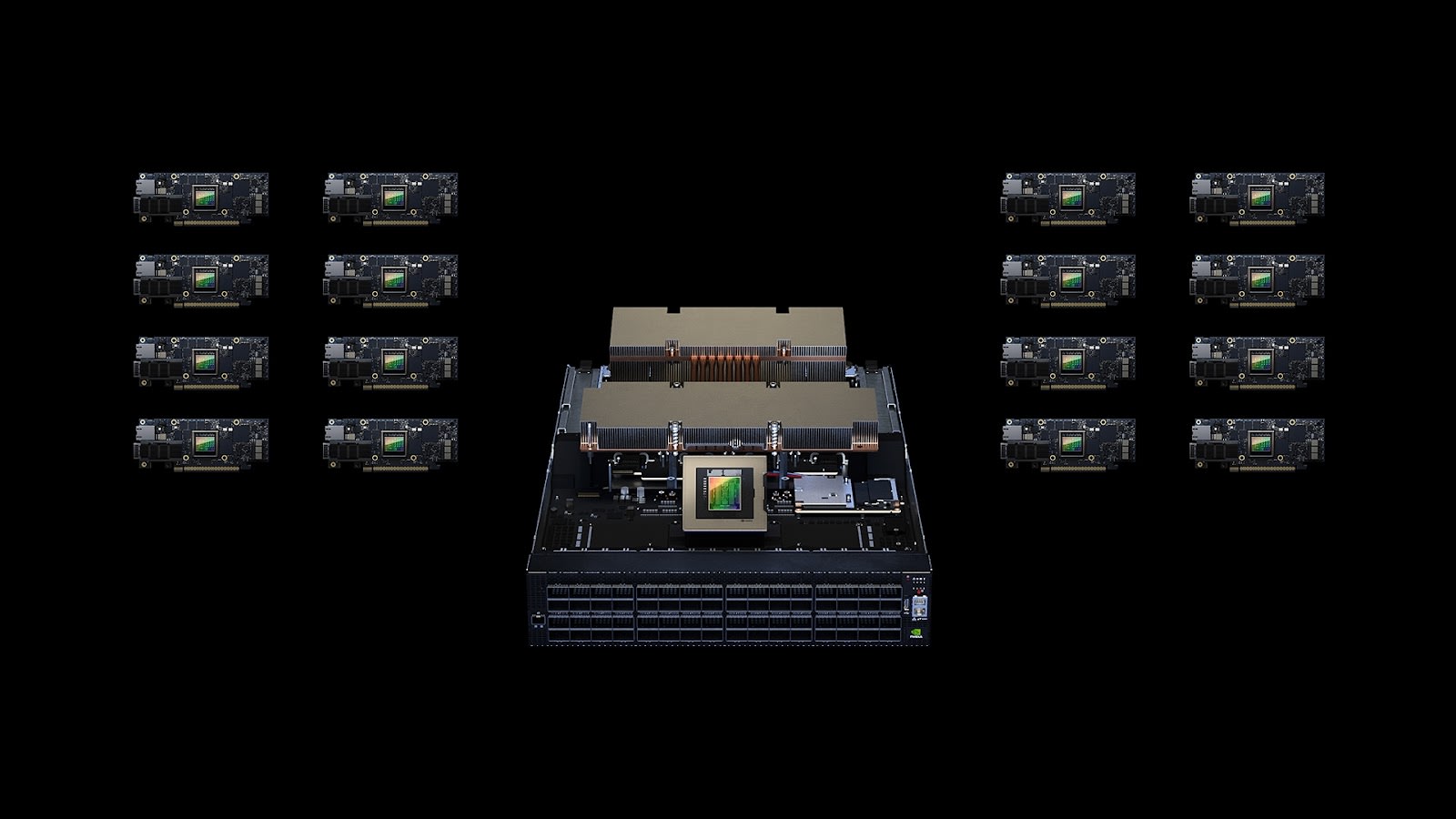

With Spectrum-X, NVIDIA proposes one of the first end-to-end Ethernet solutions designed for multi-tenant environments where multiple AI jobs run simultaneously. Spectrum-X consists of several software innovations that rely on recently launched hardware: Spectrum-4 Ethernet switches and BlueField-3 based DPUs and Super NICs.

NVIDIA Spectrum-X

NVIDIA Spectrum-4 Ethernet switches

Part of the Spectrum-X Network Platform Architecture, the Spectrum SN5600 is the embodiment of Spectrum-4, the latest line of NVIDIA Ethernet switches. It complements top switching capacity with Ethernet extensions to achieve unrivalled RoCE performance when combined with BlueField-3 DPUs and Super NICs.

| Feature | Specification |
| --- | --- |
| Switching capacity | 51.2 Tb/s (terabits per second). 33.3 Bpps (billion packets per second). |
| 400GbE (Gigabit Ethernet) ports | 128 |
| 800GbE (Gigabit Ethernet) ports | 64 |
| Adaptive routing with advanced congestion control | Per packet load-balancing across the whole fabric. End-to-end telemetry with nanosecond-level timing precision from switch to host. Dynamic reallocation of packet paths and queue behaviour. |
| Universal shared buffer design | Bandwidth fairness across different-sized flows, protecting workloads from “noisy neighbours.” |
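As a quick sanity check on the figures in the table, the port counts and the switching capacity line up: either port configuration adds up to the same aggregate bandwidth. The helper name below is ours, used only for this arithmetic.

```python
GBPS_PER_TBPS = 1000

def aggregate_tbps(port_count, port_speed_gbps):
    """Total bandwidth, in Tb/s, of `port_count` ports at `port_speed_gbps` each."""
    return port_count * port_speed_gbps / GBPS_PER_TBPS

print(aggregate_tbps(64, 800))   # 64 x 800GbE ports
print(aggregate_tbps(128, 400))  # 128 x 400GbE ports
```

Both calls give 51.2 Tb/s, matching the stated switching capacity.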

NVIDIA BlueField-3 DPU and Super NICs

A major evolution of the BlueField line, the NVIDIA BlueField-3 networking platform offers speeds up to 400 Gb/s (gigabits per second). It is purpose built to support network-intensive, massively parallel computing and hyperscale AI workloads. BlueField-3 DPUs act as the trusted management isolation point in the AI cloud. Moreover, BlueField-3 Super NICs are tightly coupled with the server’s GPUs and the Spectrum-X Ethernet switches. Together, they deliver markedly better network performance for LLM and deep learning training. At the same time, they improve power efficiency and ensure predictable performance in multi-tenant environments.

| Feature | Advantage |
| --- | --- |
| Offloading of congestion avoidance mechanisms | Frees up GPU servers to focus on AI learning. |
| NVIDIA Direct Data Placement | Places packets that arrive out of order, as a result of adaptive routing load balancing, in the correct order in host/GPU memory. |
| Management and control of the sender’s data injection rate | Processes telemetry information sent by Spectrum SN5600 switches to maximise network sharing efficiency. |
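The idea behind placing out-of-order packets directly in the right spot can be sketched in a few lines. This is a conceptual illustration only: the real mechanism runs in the NIC hardware, and the `(offset, payload)` packet format and the `place_packets` function are assumptions made for the example.

```python
def place_packets(packets, message_length):
    """Write each payload at the offset it carries, whatever the arrival order.
    No reordering queue is needed: the offset alone decides the destination."""
    buffer = bytearray(message_length)
    for offset, payload in packets:
        buffer[offset:offset + len(payload)] = payload
    return bytes(buffer)

message = b"gradient-chunk-0123456789"
chunk = 5
in_order = [(i, message[i:i + chunk]) for i in range(0, len(message), chunk)]

# Per-packet load balancing may deliver the chunks over different paths,
# so they can arrive in any order.
arrival = [in_order[3], in_order[0], in_order[4], in_order[1], in_order[2]]

print(place_packets(arrival, len(message)) == message)  # True
```

Because each packet carries enough information to be written straight to its final location, the receiver does not have to buffer and sort packets before handing the data to the GPU.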

With Spectrum-X, NVIDIA offers a purpose-built networking platform for demanding AI applications, with a range of benefits over traditional Ethernet.

How combining MAAS with NVIDIA smart NICs demonstrates data centre AI evolution at Canonical

Canonical’s metal-as-a-service software (MAAS) provides large-scale data centre network and server infrastructure automation. It turns the management of bare-metal resources into a cloud-like environment. To leverage the NVIDIA Spectrum-X solution to the Ethernet AI bottleneck, the operating system and network function software of BlueField-3 cards need to be provisioned and configured appropriately. It is no surprise, then, that MAAS is being updated to assist with BlueField-3 provisioning and lifecycle management.

Designing your data centre infrastructure to take advantage of Spectrum-X innovations is the first step towards adapting it to AI workloads. MAAS and Juju are Canonical’s open-source automation and infrastructure software. Combined, they enable consistent deployment of network equipment, servers and applications. They remove the burden of having to micromanage each individual component. Moreover, Canonical’s expert staff ensure bugs and security vulnerabilities are fixed. Finally, Ubuntu Pro provides the required certifications to make sure your data centre is compliant with the most stringent security standards.

With several years of experience deploying Smart NICs and DPUs in varied environments, at Canonical we have grown in expertise and have perfected the automation of their operating system configuration, update and integration within the data centre.

BlueField smart NICs provisioning with MAAS and PXE

Since its 3.3 release, Canonical’s MAAS can remotely install the official BlueField operating system (a reference distribution of Ubuntu) on a DPU and manage its upgrades just as with any other server, through the UEFI Preboot eXecution Environment (PXE, often pronounced “pixie”). A parent-child model enables the management of the relationship between the host and its DPUs. As the BlueField OS is an Ubuntu derivative, MAAS can present it as a host for Juju to manage the installation of additional applications, exactly as it does with regular Ubuntu servers.
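The parent-child relationship can be pictured with a small data model. This is a sketch for illustration, not MAAS’s actual schema or API: the `Machine` class, its fields and the hostnames are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Machine:
    hostname: str
    kind: str                     # "host" or "dpu" (illustrative labels)
    parent: Optional[str] = None  # hostname of the parent host, if any

def children_of(machines: List[Machine], parent_hostname: str) -> List[str]:
    """Return the hostnames of the DPUs attached to a given host."""
    return [m.hostname for m in machines if m.parent == parent_hostname]

# Hypothetical inventory: one GPU server with two DPUs, one without any.
inventory = [
    Machine("gpu-node-01", "host"),
    Machine("gpu-node-01-dpu0", "dpu", parent="gpu-node-01"),
    Machine("gpu-node-01-dpu1", "dpu", parent="gpu-node-01"),
    Machine("gpu-node-02", "host"),
]

print(children_of(inventory, "gpu-node-01"))
```

Each DPU is a machine in its own right, with its own operating system to deploy, while the link to its parent keeps track of which server it physically sits in.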

This way of managing the DPU as a host facilitates a deeper integration between the applications that run on the host and the network, storage and security functions that run on the DPU. It delivers on all the benefits associated with general purpose offloading and acceleration. Still, it is not tailored to solving the specific problems of multiple simultaneous AI training workloads.

MAAS provisioning directly via the smart NICs’ BMC

Another method leverages the DPU’s own physical management interface to enhance security and decouple the data centre infrastructure from the workloads it sustains. As a server embedded within a server, the DPU creates an environment where the infrastructure stack can be operated independently of the server, effectively isolating it from untrusted tenant applications. In this approach, software running on the host CPU has no direct access to the DPU. This environment isolation within the DPU facilitates the scenario where a cloud service provider is responsible for managing both networking and storage in the cloud infrastructure stack, which the tenant can use without the risk of interfering with it.

BlueField-2 and BlueField-3 DPUs and Super NICs fitted with a baseboard management controller (BMC) are now available and can be leveraged for this second method. This BMC supports the Redfish and IPMI network standards and application programming interfaces (APIs). Some characteristics of the DPU distinguish it from a regular server and require changes to the usual MAAS workflows. For instance, one cannot turn off a DPU running inside a server. Thus a “cold” reset, expected by some of the procedures, is only possible by power cycling the entire host, which should be avoided. These adaptations to MAAS are currently being implemented and will be available as part of exciting new features in the next release.
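To illustrate the kind of adaptation involved, here is a minimal sketch of a Redfish reset request that only allows warm resets for a DPU. It is not MAAS code. The `ComputerSystem.Reset` action and its `ResetType` values come from the Redfish standard, while the BMC address, the system identifier and the policy of rejecting power-off style resets are assumptions made for the example. The request is built but never sent.

```python
import json
import urllib.request

# ResetType values defined by the Redfish ComputerSystem.Reset action.
WARM_RESETS = {"GracefulRestart", "ForceRestart"}
COLD_RESETS = {"ForceOff", "GracefulShutdown", "PowerCycle"}

def build_dpu_reset_request(bmc_host, system_id, reset_type):
    """Build (without sending) a Redfish reset request for a DPU.
    Cold resets are rejected: a DPU cannot be powered off on its own."""
    if reset_type in COLD_RESETS:
        raise ValueError(f"{reset_type} would require power cycling the whole host")
    if reset_type not in WARM_RESETS:
        raise ValueError(f"unsupported reset type: {reset_type}")
    url = (f"https://{bmc_host}/redfish/v1/Systems/{system_id}"
           "/Actions/ComputerSystem.Reset")
    body = json.dumps({"ResetType": reset_type}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/json"},
    )

# Hypothetical BMC address and system identifier.
request = build_dpu_reset_request("dpu-bmc.example.internal", "Bluefield", "ForceRestart")
print(request.get_method(), request.full_url)
```

A workflow written for regular servers might ask for `ForceOff` followed by `On`. For a DPU, that sequence has to be replaced by a warm restart or deferred until the host itself is rebooted.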

By enabling central management and control of smart NICs, the integration of MAAS with Spectrum-X delivers on the promise of the next-generation data centre, ensuring it is fit for the purpose of modern AI training and inference workloads in both single-tenant and multi-tenant environments.

Conclusion

Canonical is working with partners at the forefront of the data centre infrastructure market and ensuring the best support for cutting-edge features and performance improvements that they offer. Stay tuned for future release announcements of our related infrastructure software and operating systems!
